Europe Faces the AI Revolution

A big-picture view

Artificial intelligence is already transforming our societies and economies. The technology is currently being used for machine translation, identification of cancers (radiology), loan and insurance risk assessment, writing of classical music, and generation of creepy images (try out DALL-E mini yourself, it’s fun!). This is only the beginning: applications of AI will continue to intensify and expand to new areas, with profound and uncertain implications for the future of work, politics, and meaning in human life.

The AI revolution has been driven especially by researchers and companies in the United States of America and China. However, AI has risen to increasing prominence on the European agenda. The European Union is eager to play catch-up and to set regulatory standards for human-centered AI under the twin objectives of promoting “excellence and trust” in AI.

Just last year, the European Commission proposed an AI Act which includes a framework for determining which types of AI should be allowed and under which conditions. For example, use of facial recognition AI by police forces would be restricted and AI for purposes of social credit scoring (as exists in China) would be banned. The legislation is currently being discussed by MEPs and by national governments in the Council.

Alongside this legislative and policy work, a tremendous amount of thinking is going on regarding the applications and implications of AI in just about every sector. For example, the European Parliamentary Research Service (EPRS) is publishing numerous studies on AI examining its impact in areas like healthcare, transport, data, propaganda, and much else. As EPRS targets educated laymen – namely politicians and their staff – its publications are often the best place to start to get a handle on an EU policy topic.

The European Commission has also been extremely active. The EU executive created AI Watch in 2018 as a specialized service monitoring the development, uptake, and impact of AI in Europe. AI Watch and the broader Joint Research Centre (JRC) - the Commission’s scientific research service - have already produced hundreds of studies dealing with AI, often very detailed.

A big-picture view of AI

Discussion of AI often enters territory traditionally occupied by radical science fiction. There is much speculation about the prospects for creating a self-improving Artificial General Intelligence (AGI) that would rapidly achieve god-like qualities. Whatever the likelihood of this, in the near or the long term, AI invites stimulating, outside-the-box thinking. In particular, it calls for what Lee Kuan Yew called “helicopter quality,” meaning the ability to zoom in and out on an issue so as to “see the broad picture and have the ability to focus on relevant details.”1

An example of such institutional big-picture thinking is provided by Vladimir Šucha and Jean-Philippe Gammel’s Humans and Societies in the Age of AI, published by the Commission last year. The authors are high-level EU officials, Šucha being the former head of the JRC and Gammel the current director-general for human resources. Drawing on input from over 30 experts, the study provides a bird’s-eye view of the many social, economic, and political issues posed by AI.

In contrast to most sociological and political discourse today, much discussion of AI is evolutionarily informed and grounded in a realistic understanding of how human nature emerged and of its unfixed character. The human species’ spectacular evolutionary success in conquering Earth’s extraordinarily diverse land environments rests fundamentally on our uniquely developed capacity for collective intelligence:

Our uniquely high levels of individual intelligence.

Our capacity for social cooperation (by no means a uniquely human trait).

Our capacity to build upon the achievements of others (including, crucially, previous generations) by sharing and passing on information and practices through culture (which enables us to evolve socially far faster than genetically).

All this amounts to varying levels of collective social and intergenerational intelligence which have enabled the expansion of human populations across the globe, the development of complex human societies and civilizations, and our unsteady but ultimately exponential scientific, technological, and economic development.

As Šucha and Gammel note: “Until now, humans have been using their natural cognitive capacities given by genetic inheritance and formed by their natural environment.” AI may enable another exponential leap in collective intelligence on a par with the shift from genetic to cultural evolution.

Individual intelligence could be effectively boosted through the use of AI assistants and brain-computer interfaces. Cognitive neural prosthetics are already being used as mind-controlled artificial limbs, but such devices could also be used to mitigate cognitive impairments such as Alzheimer’s or autism.

The combination of genetic, digital, and other environmental data and the capacity to process them mean AI will come to know us better than we know ourselves:

The processing of large quantities of data, including individual genome data and information about people’s surrounding environment and variables will not only enable personalised treatments, but also very early preventive interventions. This will eventually raise the question of defining the limit between what is healing and what is upgrading humans with all the ethical and fairness issues this entails.

If the human-AI relationship is complementary, this could lead to an explosion of self-knowledge and resulting recommendations to improve our health and habits, and open up possibilities for human enhancement.

AI-human complementarity could then be a huge enabler of human flourishing:

Human flourishing is traditionally defined as the state wherein an individual experiences positive emotion, acquires the abilities to achieve their own goals within themselves and with the external environment, and is able to interact positively with their social environment. Furthermore, our future should be characterised by a smooth human-AI cooperation and complementarity rather than competition. What we should aim at is not to try to run faster than the algorithms as is sometimes suggested, but to co-evolve and to grow together harmoniously.

More speculatively, and disturbingly for many, AI could enable a posthuman future:

[Yuval] Harari or [Yann] Le Cun suggest that Homo sapiens is only one stage of the evolution and that we will maybe soon have to pass on the torch to entirely new types of entities. This should incite us to take the development of AI extremely seriously and to think about what kind of future we want for humankind.

Negative impacts: addiction, dependence, polarization

Whatever one thinks of transhumanist ambitions, the many existing negative consequences of the digital transition are suggestive of the potential problems further deployment of AI could cause:

Some first effects of the digital transformation can already be observed. There is wide consensus among experts that we can suffer from attention loss and the inability to concentrate and dive deep into problems because of the multiple disturbances generated by the digital environment. We also suffer from a greater impatience linked to our need of instant reactions and gratification, from reduced memory due to the instant availability of knowledge and recordings online, from a reduced capacity to orient ourselves in space, or from the incapacity to take decisions because we rely more and more on algorithms to decide for us. …

Research has also confirmed a causal link between our growing inability to concentrate and unhappiness.

AI could further worsen problems such as “behavioural addiction, strengthening biases, polarisation and radicalisation, or jobs obsolescence.”

While new technologies are generally empowering, they can also lead to unhealthy dependence, atrophy of previously used skills and capacities (e.g., being able to drive without satnav), overstimulation, and addiction (already evident in the age of TV and video games, and now algorithmically perfected in social media business models). No doubt many of us have experienced some or all of these in the digital age.

I can personally think of a few things our tech giants should not (be allowed to) do:

Social media platforms that enable “push notifications” by default, informing you of unrelated posts as though they were interactions from other people. (Twitter still misleads me into thinking I have had an interaction through such notifications, despite my having turned this “feature” off.)

Facebook’s extremely prominent “Stories/Reels” feature, designed to be extremely distracting and to encourage mindless scrolling between short videos. (Incidentally, should we get rid of TikTok?)

Unrelated to AI, Amazon’s practice of making the “purchase without subscribing to Prime” option practically invisible while making the Prime option look like the only button during unrelated purchases. (This can lead to an accidental subscription if you click through carelessly, potentially resulting in months of bills.)

Besides algorithmic addiction and dependence, AI and data-gathering will enable the development of unprecedentedly intricate and intrusive digital dictatorships. The dictatorships of Zaïre, communist Romania, and East Germany were world-renowned for the degree to which they spied on their citizens. But their man-operated systems – listening in on phone calls, secretly reading letters – look downright primitive and lackadaisical compared to the routine and systematic digital surveillance in China and many Western countries today, notably since the War on Terror and the COVID crisis.

International and social inequalities

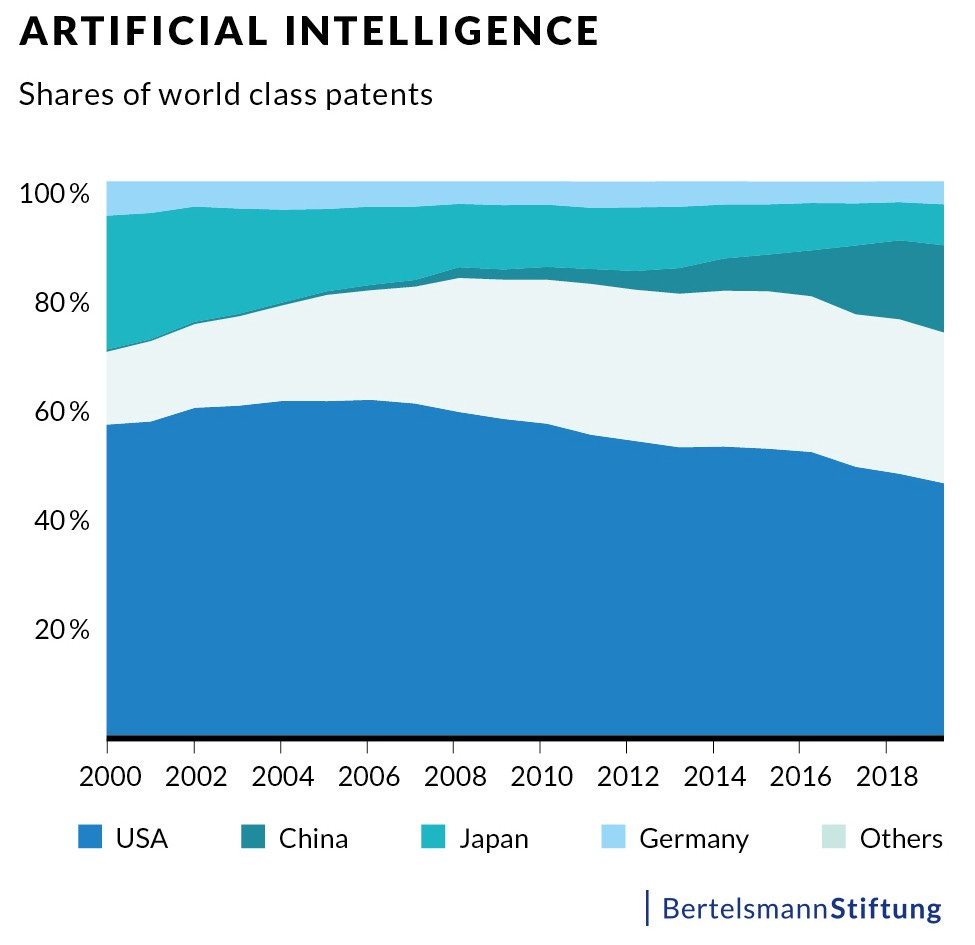

AI also has tremendous implications for global inequalities. The authors note that the bulk of AI patents come from the United States, Japan, and China, while “the Global South is not present at all in the patenting of AI related technologies.” AI is likely to worsen such inequalities as it gives a critical competitive edge to first-mover nations (see Mega-trends #2 and #5 in my previous post).

AI industries are likely to have winner-take-all dynamics similar to what we see in other parts of the tech sector. If, as seems likely, AI uptake and its applications to human enhancement, such as increasing individual intelligence, are uneven, this could create “the most unequal societies that ever existed.”

While these concerns about inequality are legitimate, we should not forget that any increase in intelligence, even restricted initially to certain classes or regions, would radically increase nations’ and humanity’s collective intelligence and capacity to solve problems, including that of spreading the benefits of AI.

Over the course of the 20th century, life expectancy doubled worldwide and poverty was massively reduced, essentially driven by the local application of technological, economic, and health innovations from the West and, to a lesser extent, East Asia. Human progress has never occurred without initial disparities, because breakthroughs first occur in particular societies.

AI and cultural diversity

In addition to reinforcing geographical inequalities, AI will also interact in complex ways with the diversity of human civilizations. Various nations are unlikely to passively accept the AI norms adopted by the dominant players. As Šucha and Gammel note:

Some of the codes that were developed mainly based on Western enlightenment principles are already challenged by experts from other parts of the world who claim that they are at odds with Confucian, Hindu or Islamic values. …

Cultural variations and differences in interpretations should not be underestimated either. One key issue is various trade-offs among different dimensions of well-being and ultimately also the trade-off between individual freedom and the common good.

Different societies will apply AI in different ways according to their conception and the importance they ascribe to various values, such as equality, freedom, excellence, the common good, truth, and virtue.

AI also has implications for cultural sovereignty. For example, algorithms recommending music and videos may reinforce the Western monoculture centered on Anglo-American entertainment industries.

In evolutionary terms, I would argue that real socio-cultural and biological diversity and experimentation are key to humanity’s future survival and flourishing as a species. Diversity and experimentation are the classic means of surviving Darwinian selection events and finding the best solutions to changing environmental conditions.

As Šucha and Gammel note, diversity is also important at the individual level in the face of stifling social conformism:

Diversity does not only concern groups but also individuals. Atypical, non-conformist or transgressive individuals triggered many of the discoveries and of the ‘avant-garde’ movements that have allowed humanity to move forward. We need to nurture difference and also to respect what happens in the margins of our societies. In addition, and rather counter-intuitively, many of our steps forward built on chance or initial mistakes or imperfections. One of the challenges in developing AI will therefore be to leave enough room for this type of unpredictability.

AI as enhancer or disabler of democracy and autonomy?

The impact of the digital transformation on liberal democracies has been mixed. On the one hand, it has enabled an explosion of online political subcultures and alternative media. Real ideological and political pluralism is much more pronounced today than in the days when political discussion was dominated by a few newspapers and national television stations.

The downside of this has been a breakdown of political consensus, as many of the ideologies in this pluralist media-political space are viscerally incompatible (e.g., national-populism, liberal-globalism, and left-wokism). Online alternative media, the mainstream media’s refocusing on niche liberal/elite audiences, and social media algorithms’ tailoring of content to push users’ political buttons have all contributed to the creation of echo chambers and antagonistic subcultures.

How AI will interact with our democracies in the future is unclear. Šucha and Gammel suggest AI could enable elites to stay in touch with grassroots sentiment:

AI systems could help to better sense citizens’ concerns and to take the emotional temperature in different geographic locations. This could then contribute to developing policy options that speak to people’s emotional needs and values, therefore leading to improved impacts.

On the other hand, will democracy remain meaningful if deferring decisions to AI becomes routine? Advocates of so-called “RoboGov” have argued that AI decision-makers would be “less hampered than human leaders by ideological extremism, tunnel vision, egotism and narcissistic tendencies.”

Leading thinkers on AI can be strikingly frank in their pessimism on the future of liberal democracy.

On the other hand, AI will become so good at making decisions for us that we will learn to trust it on more and more issues, running the risk that we gradually lose our ability to make decisions for ourselves. The conclusion Harari draws from this evolution is that democratic elections and free markets will make little sense in the future and that liberalism may collapse. …

Many experts we consulted therefore stressed that there is a high probability that traditional democratic politics will lose control of the events, decline and even disappear. What is at stake is therefore less to preserve liberal democracy as we know it, than to be able to reinvent new forms of democracy fit for the age of AI. Concrete suggestions concerning possible ways forward remain however scarce at this stage, but it should not prevent us from starting this important discussion.

I am struck that Harari, who since the publication of Sapiens in English in 2015 has become the hottest public intellectual among global elites, seems to have views on liberty and democracy closer to those of Curtis Yarvin (a.k.a. “Mencius Moldbug”) than to what Davos attendees profess.2 Be that as it may, there is much work to be done to explore how AI can foster digital democracy, good government, and collective human flourishing.

Taking action so that AI fosters human flourishing

AI then presents unprecedented opportunities and risks for human flourishing. Even social media - rightly blamed for promoting attention-seeking narcissism and dopamine-hit overstimulation - offers much to people: “many consider that … that sharing experiences and emotions with other people actually enhances them. People enjoy being part of the dataflow because that makes them part of something much bigger than themselves.”

Šucha and Gammel suggest:

[I]ncentives could be created for platforms to better align with values that are explicitly chosen by their users. Those values might be for instance time well spent, promoting healthier democratic conversation, reducing environmental footprints, or other goals that come to be recognised in the years ahead.

It is not hard to imagine AI making better decisions than we do in our personal lives, such as whether to go jogging in the morning, what to eat, or whether to binge-watch some more TV. AI will also improve at making suggestions even on intimate matters, such as what to get your spouse for your anniversary (last Christmas was the first time I bought a family gift based on a Facebook ad).

Such recommendations or “nudging” should, in liberal societies, be based on explicit consent. But, beyond this, the challenge will be in reinforcing meaningful human autonomy in the strong sense, namely, using AI within a broader framework of decision-making in which we consciously and thoughtfully choose our own values and life-goals. As Šucha and Gammel note:

Some will be able to make a smart use of the new technologies that are developing in order to keep at least a certain level of free will and of autonomy, to take better decisions and to attain their goals.

It is not difficult to imagine a post-scarcity, AI-dominated existence: most people staying at home, money periodically appearing in their bank accounts as part of their Universal Basic Income, regularly receiving packages from Amazon and HelloFresh or Deliveroo on their doorstep, and deferring decisions large and small to AI. Depending on one’s viewpoint, such a prospect could seem utopian or make human life seem utterly meaningless. Šucha and Gammel observe: “There is a great deal of scientific knowledge arguing that meaning and a sense of purpose are the cornerstones of a healthy mind and well-being.”

Šucha and Gammel also make a number of suggestions for how to approach AI going forward, including:

Conduct a meta-study on the cognitive, socio-emotional, and mental health impacts of digital transformation.

Map the intelligence landscape - including human, non-human animal, and artificial intelligences - in an Atlas of Intelligence.

Launch pilot projects to test a more systematic use of AI systems for policy impact assessments and evaluations. They also suggest “an experiment should also be carried out in close cooperation with video game developers to develop tools that would translate dry technical descriptions of the potential impacts of policy options and provide visual experiences of these impacts on countries, regions and communities to policymakers as well as to citizens in order to facilitate debate and decision-making.”

Create “customer defending entities” that would use AI to analyze customer data to counterbalance the analytic power of corporations and make suggestions on how to protect consumers.

In any event, this exciting field will only continue to grow in prominence and will present ever-greater promise and challenges for European policymakers.

1. Lee Kuan Yew, From Third World to First: The Singapore Story: 1965-2000 (New York: HarperCollins, 2000), p. 473.

2. Consider Harari’s ruthless parody of the U.S. Declaration of Independence:

It is easy for us to accept that the division of people [by aristocratic societies] into “superiors” and “commoners” is a figment of the imagination. Yet the idea that all humans are equal is also a myth. In what sense do all humans equal one another? Is there any objective reality, outside the human imagination, in which we are truly equal? Are all humans equal to one another biologically? Let us try to translate the most famous line of the American Declaration of Independence into biological terms …

According to the science of biology, people were not “created.” They have evolved. And they certainly did not evolve to be “equal.” The idea of equality is inextricably intertwined with the idea of creation. The Americans got the idea of equality from Christianity, which argues that every person has a divinely created soul, and that all souls are equal before God. However, if we do not believe in the Christian myths about God, creation, and souls, what does it mean that all people are “equal”? Evolution is based on difference, not equality. Every person carries a somewhat different genetic code, and is exposed from birth to different environmental influences. This leads to the development of different qualities that carry with them different chances of survival. …

So here is that line from the Declaration of Independence translated into biological terms:

We hold these truths to be self-evident, that all men evolved differently, that they are born with certain mutable characteristics, and that among these are life and the pursuit of pleasure. (Yuval Harari, Sapiens (London: Penguin, 2014), pp. 122-123.)
